Rethinking AI: Unlocking business value, shaping humanity's future

xUnlocked

AI offers many opportunities. But could it shape a more purposeful future for humanity?

How can we understand AI’s true potential for, and impact on, the future of business and humanity?

This was the theme of a compelling Sustainability Unlocked keynote presentation, hosted by Blue Earth Forum at London Climate Action Week. Our guest speaker, Dr. Daniel Hulme, Chief AI Officer at WPP and CEO of Satalia, challenged conventional notions of AI, offering a vision of a profound AI framework that will radically reshape our world as we know it.

Moving beyond many of today’s conversations, Daniel argued that AI's real power lies not merely in mimicking human capabilities. Instead, we should focus on its capacity for "goal-directed adaptive behaviour", ie, its ability to learn continuously from good and bad decisions, and to adapt in order to better meet complex objectives.

This perspective shifts the focus. Instead of simply extracting insights, AI becomes central to making superior, data-driven decisions that can unlock unprecedented value and address some of the world's most pressing challenges, including climate change.

In this article, we summarise just a few of Daniel’s thoughts, offering a fresh lens through which to view AI's transformative role in the future of both work and society.

Key themes: AI’s evolution, applications, and societal implications

The evolution of AI capabilities

Daniel gave a brief overview of the history of AI, providing context for the rapid evolution we see now in the application of AI tools. He explored:

- Early AI (1960s-70s): Focused on inferring new knowledge from known facts (Socratic method, now called agentic computing).

- Neural networks (1980s-90s): Modelled on the human brain, leading to current Large Language Models (LLMs).

- LLM progression: The rapid advancement of LLMs from "intoxicated toddlers" (regurgitating nonsense) to "intoxicated graduates" (ChatGPT, 50% nonsense), and their predicted evolution to "Master's level" (rudimentary reasoning), "PhD level" (complex problem-solving), and by the end of the decade, "Professor level" (asking novel questions and pushing the boundaries of human knowledge).

Crucially, Daniel stressed that even at Professor level, AI cannot solve complex optimisation problems directly, although it could build or use algorithms to do so. LLMs, he emphasised, are not a panacea for all problems.

Redefining intelligence and decision making

Within that rapid evolution, Daniel explored ways in which we should think about AI, arguing against the common definition of AI as "getting computers to do things that humans can do".

Humans are not the pinnacle of intelligence, he explained, especially in complex decision-making. Human cognition, while adept at pattern recognition in approximately four dimensions and solving problems with up to seven variables, inherently struggles when faced with greater complexity. This limitation often leads to poor outcomes, as human decision-making is bounded by cognitive biases and processing capacity.

In stark contrast, AI systems can identify patterns across "thousands of dimensions" and resolve problems involving "thousands of moving parts". This fundamental difference allows AI to tackle exponentially complex challenges that are intractable for human minds, and for inefficient algorithms too.

For instance, optimising 200,000 daily deliveries for a major supermarket, or allocating 5,000 auditors for a Big Four accountancy firm would be far too complex a problem for any human to ever solve. But AI, using the right algorithms, can achieve the required computation in hours.

Six core applications of AI in business

This led on to an exploration of the business impact of AI, which Daniel split into six categories of application:

- Task automation: Offering significant value through simple "if-then" statements, macros, and Robotic Process Automation (RPA) to manage repetitive, mundane tasks.

- Content generation: Moving beyond generic content to brand-specific, production-grade assets (imagery, text, video). This involves making AI "brains" smart through better prompts, brand guidelines, expert tuning, and "agentic computing" (collaborating AI experts).

- Human representation: Building AI models that recreate how people think and feel, moving beyond traditional demographic data to understanding deeper values and predicting behavioural change. This also includes using AI to identify biases (for example, creating a "green-washing brain" to help vet content).

- Insight extraction (machine learning): The true power lies in explaining why predictions are made, enabling humans to make better decisions based on surfaced insights.

- Complex decisions (optimisation): Applying advanced algorithms to problems with an astronomical number of possible solutions (for example, allocating 500 staff to 500 jobs, where the number of possible allocations exceeds the number of atoms in the universe). Solving such problems well could deliver massive efficiency gains and carbon reductions.

- Human augmentation: Creating "digital twins" of employees (trained on digital footprints) to optimise project allocation and ensure job satisfaction. This extends to personal AI agents that learn preferences and can act as purchasing engines, ensuring purchases align with personal values (for example, sustainably sourced goods), and holding brands accountable.
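To put the "500 staff to 500 jobs" figure from the complex decisions category in perspective, the short Python sketch below counts the possible allocations. It rests on two assumptions that are ours rather than Daniel's: each person takes exactly one job (so every allocation is one ordering of the staff list), and the observable universe holds roughly 10^80 atoms, a commonly quoted estimate.

```python
import math

STAFF = 500  # figure quoted in the talk: 500 staff, 500 jobs

# Assumption: one person per job, so each allocation is a permutation
# of the staff list and there are 500! (500 factorial) of them.
possible_allocations = math.factorial(STAFF)

# Assumption: a commonly quoted rough estimate of the number of atoms
# in the observable universe.
ATOMS_IN_UNIVERSE = 10 ** 80

# Count the digits to get a feel for the size of the number.
digits = len(str(possible_allocations))

print(f"Possible allocations: a number with {digits} digits")
print(f"Atoms in the universe: a number with {len(str(ATOMS_IN_UNIVERSE))} digits")
print(f"More allocations than atoms? {possible_allocations > ATOMS_IN_UNIVERSE}")
```

Checking every option one by one is clearly out of the question at this scale, which is why problems like this call for algorithms that search intelligently rather than exhaustively.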

Ultimately, Daniel talked of the creation of comprehensive "digital twins" encompassing entire supply chains — the flow of goods, back-office processes, and the workforce itself — which would enable organisations to move from rigid hierarchies to agile, adaptive structures, fostering innovation, and better talent allocation.

Navigating AI risks: Safety, security, ethics, and governance

Alongside AI’s huge potential, Daniel also examined the related risks, asking three questions to challenge AI assumptions:

- Is talk of AI ‘ethics’ appropriate? Ethics imply intent, but intent is distinctly human, he argued. If AI is merely a tool without its own will or moral compass, then any ethical "failure" or "success" ultimately reflects the human intent behind its design, deployment, and oversight; ie, AI ethics means human ethics applied to AI development and use. Daniel conceded that more advanced AI could develop intent in the future, but this would shift thinking entirely, towards building human values and morality into AI rather than trying to create AI guardrails.

- Are algorithms explainable? The fundamental difference between traditional software and AI often lies in AI's inherent opacity regarding its decision-making processes. But if algorithms can be designed to articulate how they arrive at their decisions, then many concerns surrounding AI's "black box" nature are resolved, fostering transparency and trust.

- What happens if AI goes very right? In Daniel’s view, an often overlooked but real risk is that AI surpasses expectations and, in doing so, causes unforeseen harm. For example, using AI to increase fee-earner utilisation in a professional services firm could massively increase billable hours. But if it is too successful, it could create problems in other parts of the supply chain that are not equipped for the shift. Similarly, a human bias called homophily means we tend to like and trust people who look and sound like us. If we let AI loose on optimising marketing through that frame, it could reinforce bias and bigotry in social bubbles.

The six AI singularities (macro risks)

Using the PESTLE framework, a tool for analysing macro-environmental factors, Daniel outlined six major singularities that could impact society and humanity:

- Political: A post-truth world where misinformation and deepfakes erode the fabric of reality (for example, AI-voice cloning to foment social unrest or commit fraud).

- Environmental: AI increases energy consumption, worsening the climate/water crisis. (Although, paradoxically, AI’s proper application could optimise supply chains to drastically reduce energy needs and carbon emissions, potentially halving planetary energy requirements.)

- Social: The potential to "cure death" through AI-driven medical advancements, raising profound questions about a world with extended lifespans.

- Technological: The creation of superintelligence (AI millions of times smarter than humans), necessitating the development of conscious, empathetic, and human-aligned AI.

- Legal: Ubiquitous surveillance and the challenge of preventing bad actors from using AI to accumulate power and wealth.

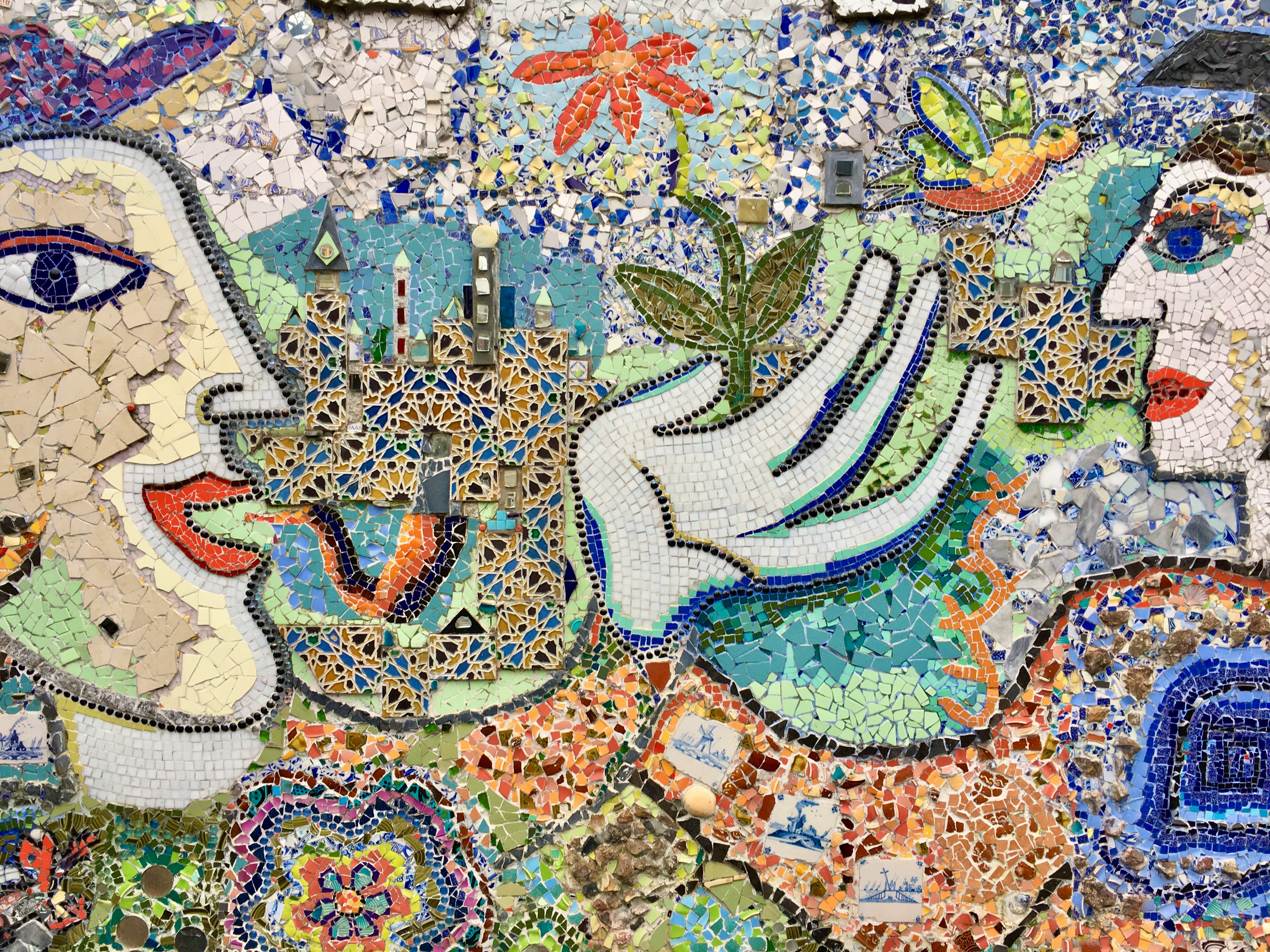

- Economic: The automation of the majority of human labour. This could, at least in the short term, lead to social unrest. But a "protopia" could be envisaged in which the cost of essential goods (food, healthcare, education) becomes negligible, freeing humanity from economic constraints to pursue creativity and more meaningful life experiences.

Future vision: The next frontier of AI

Daniel’s presentation offered a refreshing and deeply insightful perspective on AI, urging a move beyond simplistic definitions, and towards a holistic understanding of its adaptive and transformative power. He emphasised that AI, when applied with appropriate intent and explainable algorithms, can drive unprecedented efficiencies, foster innovation, and address complex global challenges like climate change.

The major obstacle of energy inefficiency in LLMs could be resolved, he suggested, through innovation in “neuromorphic computing”, which mimics the energy-efficient spiking of the human brain and could massively reduce energy consumption. (In this respect, see also our recent insight featuring Rahul Bhusan’s thoughts on the AI-energy partnership of the future.)

Daniel also envisaged a world in which we shift from Generative AI to Agentic AI (collaborating agents), and eventually to Physical AI — ie, robotics.

Ultimately, Hulme's vision points towards a future in which purpose-driven organisations, leveraging AI, can unlock human creativity and enable individuals to live beyond economic constraints, contributing to a "protopia", a continuously improving world.

This profound shift in thinking provides considerable food for thought for leaders and innovators across all sectors, as we continue to navigate the intertwined future of data, sustainability, and humanity.

How xUnlocked supports businesses to navigate change

At xUnlocked, we believe that today’s most significant disruptions — from AI to climate change — are not just challenges to overcome, but opportunities to lead. That’s why we’ve created Sustainability Unlocked and Data Unlocked, platforms designed for businesses that want to move beyond reactive thinking and into a future where sustainability and advanced technologies like AI work hand in hand to create lasting value.

We partner with companies to equip their workforces with the sustainability and data skills they need to thrive amid transformation. By demystifying complex issues and translating them into targeted, role-relevant learning pathways, we help leaders and teams alike move from uncertainty to action.

Our content, co-created by global experts, supports both deep expertise and broad organisational engagement, whether you're aligning AI with sustainability goals, rethinking value chains, or building the internal capability to spot opportunities where others see only risk.

We are trusted partners in change. We create the skills infrastructure that empowers your people to shape the future — and gives your business the resilience, insight, and momentum to lead it.

Contact us now and find out how xUnlocked could help your business.

